Game Audio Paper

“Game Audio Rules”

Steven Machen

Leeds Metropolitan University

5 Earls Court, Doncaster

DN4 5EZ

+44795411901

s.machen4922@student.leedsmet.ac.uk

Abstract

In this paper the author will present a critical evaluation of the unique challenges presented by interactive audio.

1. Introduction

The aim of this paper is to give the reader an understanding of interactive audio, with an overview of the challenges, issues, technologies and roles within the discipline. An additional folder of video examples accompanies this paper.

The term “interactive” has been widely discussed within games. Some theorists argue that:

“a videogame cannot be interactive because it cannot anticipate the actions of its players.” [1]

However, for the purposes of this paper the author will use the term interactive in the way it is used within the games industry and defined by practitioners such as Karen Collins (2008).

In order to fully understand what interactive audio is, we must first understand the roles that audio plays within a video game, as well as the terminology used within the industry.

2. Roles/types

So what is game audio?

In the article “IEZA: A framework for game audio”, Sander Huiberts and Richard van Tol (2008) [2] define game audio as:

“Interactive computer game play…the term game audio also applies to sound during certain non-interactive parts of the game — for instance the introduction movie and cutscenes.”

In addition to this description, the article also highlights two points that the authors believe sum up what game audio should achieve.

1 Making the game play experience intense and thrilling.

2 Helping the player play the game by providing necessary game play information.

The first statement is backed up with two examples [14] from the game Call of Duty: Black Ops. The first example (example 1) shows just the game play action with no audio. The second example (example 2) adds the audio, and in comparison the action becomes more intense and thrilling.

An example of the second statement can be seen in example 3 [9], from the game Splinter Cell: Double Agent. As the player gets closer to the guards, the guards can be heard before they are seen, making the player aware that they are approaching them and may need to take appropriate action. In addition, the feedback that the player receives over the radio is important for achieving the goals as well as for making the player aware of any danger.

Many sounds make up a game’s audio track, and many researchers have tried to explain how these sounds fit within a game.

Collins, K. (n.d.) [4] suggests that a broad categorization for game audio could lie under the terms:

-Diegetic – This refers to sounds coming from the real world environment.

-Non-diegetic – This refers to sounds such as music, stabs, and sound effects not associated with the real world.

It is also suggested that this can be further categorized into dynamic and non-dynamic sound, before breaking this down even further into the types of dynamic sound related to the activity of the player.

This gives a brief understanding of game audio; however, for a more in-depth analysis of the types of game audio we must look at other researchers who have tried to define this area.

Grimshaw & Schott (2007) [5] propose an expansion of the terms diegetic and non-diegetic, breaking these categories down even further by introducing the following terms.

-Telediegetic – “sounds produced by other players but having consequences for one player”

-Ideodiegetic – “immediate sounds, which the player hears”

The Ideodiegetic category can in turn be divided into two further subsections:

-Kinediegetic – “sounds triggered by the players actions”

-Exodiegetic – “all other Ideodiegetic sounds”

Grimshaw & Schott (2007)

Although the above explanations describe the types of audio within games, they can become overly complicated. Huiberts & van Tol (2008) [2] created the IEZA (Interface, Effect, Zone, Affect) framework to show the types of audio in games (fig. 1).

This framework gives a good understanding of how the audio links within a game.

The diegetic side is divided into two subsections, Zone and Effect.

Zone refers to noises such as wind, rain and ambience that represent the real world or in-game environment. These are often described by audio designers as ambient or environmental sounds.

Effect refers to sounds linked to diegetic parts of the game, either on screen or off screen. Examples include footstep sounds or breathing.

Non-diegetic sound is again split into two sections, Affect and Interface.

Interface refers to non-diegetic sounds outside of the game world environment, such as those for health level or score.

Affect refers to sounds linked with non-diegetic parts of the environment, such as music designed for a specific target audience. For example, a horror game may use a dramatic, spooky score.

3. Issues

There are many issues relating to game audio. In this next section the author will outline the issues that can arise when designing and implementing game audio, along with the solutions currently used to work around them.

The main issue audio designers have for games is the need to fit the majority, if not all, of the audio into less than 10% of the available RAM.

It is suggested that the audio designer must compromise on one of the following: quality, memory or variation, in order to achieve a good outcome.

It is suggested that when designing audio for games:

“You can have lots of variation with good-quality sounds, but this will take up lots of memory”

“You can have good quality sounds that will fit into memory, but there won’t be much variation as we can’t fit many of them in”

“You can have lots of variation within small amounts of memory, but the quality of our sounds would have to go down”

Stevens, R. & Raybould, D. (2011) [6]

With this in mind it may help to look in more depth at each of these areas.

3.1 Sample rates/file sizes

Most of the time, audio designers will decrease the sample rate of the file. Taking a stereo file that is 1 minute in length, recorded at 44.1 kHz, 16 bit, and converting it to 11 kHz, 16 bit will save roughly 7.5 MB, as the file sizes are approximately 10 MB and 2.5 MB respectively.
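The figures above can be checked with a short calculation. The sketch below assumes uncompressed PCM data with no file header, takes “11k” to mean 11,025 Hz (a quarter of 44,100 Hz), and counts a megabyte as 1,048,576 bytes; the function name is illustrative only.

```python
def pcm_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Size of raw, uncompressed PCM audio data (file headers ignored)."""
    bytes_per_sample = bit_depth // 8
    return sample_rate_hz * bytes_per_sample * channels * seconds


MB = 1024 * 1024  # assumption: binary megabytes

full = pcm_size_bytes(44_100, 16, 2, 60)     # 1 minute, stereo, 16 bit
reduced = pcm_size_bytes(11_025, 16, 2, 60)  # same clip at a quarter of the rate

print(f"44.1 kHz:   {full / MB:.2f} MB")
print(f"11.025 kHz: {reduced / MB:.2f} MB")
print(f"saving:     {(full - reduced) / MB:.2f} MB")
```

Because size scales linearly with sample rate, quartering the rate quarters the size, which is where the approximate 10 MB to 2.5 MB reduction comes from.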

The problem with this method, however, is that the decreased sample rate will affect the sound quality. This may not be such a problem when working on a mobile platform with small speakers, but on a larger platform, where the player could have a very expensive and impressive surround sound setup, it could pose a problem: nobody wants to spend all that money just to hear a tinny sound coming out of high-quality speakers.

3.2 File types / Compression

Therefore another widely used method is to change the file type. This way the designer can keep the file at the highest possible sample rate. Depending on the file type (e.g. MP3, OGG, .wav, etc.), the file size will be smaller due to the compression rate of that file type.

Brad Meyer [7] backs this up in a 2011 article on gamasutra.com.

“Perhaps the single most revolutionary breakthrough in digital audio in the past 20 years was the invention of high compression, high quality codec’s. Being able to compress a PCM file to one tenth or one fifteenth its normal size”

Meyer, B. (2007)

One problem that may occur with this method is that the engine and platform being used might not handle certain file types.

If the platform does allow this, there may be other difficulties, such as the audio team not having a dedicated audio programmer to write the additional code to allow for such changes. This may not be a problem in a large development team, as the audio programmer may just tweak the engine to allow this. However, when working in smaller teams with no dedicated audio programmer this may prove to be more challenging.

3.3 Variation

One way in which audio designers achieve variation in games is to break a large sound down into multiple smaller files. By then adding parameters such as randomization and concatenation, much more variation can be achieved than by recording the sound multiple times with a different outcome.

Fig.2

Fig.2 shows one method of achieving variation. Each sound has been downsampled to the lowest sample rate that still gives high enough quality for realistic results. These sounds are then split into separate groups. One sound is chosen at random from within each group, and the chosen sounds are concatenated in group order. Variation can also be achieved without the concatenation, leaving just the random selection.
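The random-then-concatenate idea can be sketched in a few lines. This is a minimal illustration, not the system shown in Fig.2: the group contents and clip names are hypothetical, and a real engine would play audio buffers rather than return names.

```python
import math
import random


def pick_sequence(groups, rng):
    """Choose one clip at random from each group, keeping the group order."""
    return [rng.choice(group) for group in groups]


# Hypothetical clip names: one large sound split into three stages.
groups = [
    ["crack_01", "crack_02", "crack_03"],
    ["rumble_01", "rumble_02"],
    ["debris_01", "debris_02", "debris_03"],
]

rng = random.Random(7)  # seeded only so that a run can be repeated
sequence = pick_sequence(groups, rng)

# Number of distinct concatenated results available from 8 small files.
total_combinations = math.prod(len(group) for group in groups)
print(sequence, total_combinations)
```

With three, two and three clips in the groups, eight short files give 18 possible combined sounds, which is the memory-versus-variation benefit the section describes.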

4. Non-repetitive design

There is an increasing need for non-repetitive audio design in today’s games. As levels and maps become larger, the player’s urge and need to explore them, as well as the time spent in the environment, increase. Or the player may simply play the same section of the game over and over.

For the player to be immersed in the game, audio designers must find ways to make the environment sound as realistic and as dynamically changing as possible. One way to achieve this is to create non-repetitive audio design.

5. Sound Propagation

Sound travels through the air as a wave, which forces the air molecules to vibrate and so allows the sound to travel. The way in which this wave interacts with different surfaces, such as how it reflects and diffracts, together with the way it fades over time and distance, is known as sound propagation. In summary, sound propagation is the term used to describe the way in which sound behaves in and around different environments.

This is also backed by Parfit, D. (2005) [8], who suggests:

“Sound propagation characteristics include attenuation due to distance and air, reflection, obstruction and occlusion”

Therefore, to gain a greater understanding of sound propagation we must look at these characteristics.

5.1 Attenuation

Fig.3

Fig.3 shows a representation of how attenuation works within a game engine. For the purposes of this explanation the engine in question is UDK (Unreal Development Kit).

A - Sound source

B – Maximum loudness of the sound

C – Minimum loudness of the sound

D – Listener

As the listener (D) moves towards the sound source and enters the area around C, the sound will be heard at a low level. As the listener moves closer to the sound source the volume will increase until the listener is at position B, where the volume is at its maximum. When this process is reversed and the listener moves from B to C, the sound attenuates and the volume decreases.
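The behaviour described above can be sketched as a gain that depends on the listener's distance from the source. This is a minimal illustration assuming a linear falloff between an inner radius (B, full volume) and an outer radius (C, minimum volume, taken here to be silence); it is not UDK's implementation, and engines typically offer several falloff curves.

```python
def attenuated_gain(distance, inner_radius, outer_radius):
    """Linear distance attenuation between two radii.

    Inside inner_radius the sound plays at full volume (1.0); beyond
    outer_radius it is silent (0.0); in between the gain falls linearly.
    """
    if distance <= inner_radius:
        return 1.0
    if distance >= outer_radius:
        return 0.0
    return (outer_radius - distance) / (outer_radius - inner_radius)


# Hypothetical radii in game units: B = 100, C = 500.
for d in (50, 100, 300, 500, 600):
    print(d, attenuated_gain(d, inner_radius=100, outer_radius=500))
```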

5.2 Reflection

Fig.4

Fig.4 shows the reflections in a square room. These reflections are important for the player to have a realistic sense of the environment. In figure 4 the direct signal is unprocessed, although attenuation may be added depending on the distance. It is the reflections that are important in this example. Reflection 1 bounces off two walls before reaching the listener; it is therefore quieter than the direct signal, as it has further to travel and loses some energy at each wall. Reflection 2 bounces off one wall, so it is louder than reflection 1 and reaches the listener before it, but it is still quieter than the direct signal. This all adds to the overall reverb of the environment.
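The ordering of the three signals can be illustrated numerically. The sketch below is a simplification under stated assumptions: level falls in proportion to 1/distance, each wall bounce keeps a fixed fraction of the amplitude (0.7 here, an arbitrary value), the speed of sound is 343 m/s, and the path lengths are hypothetical rather than taken from Fig.4.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at room temperature


def path_delay_and_gain(path_length_m, bounces, wall_reflectance=0.7):
    """Arrival delay (seconds) and relative amplitude for one sound path."""
    delay_s = path_length_m / SPEED_OF_SOUND_M_S
    gain = (1.0 / path_length_m) * (wall_reflectance ** bounces)
    return delay_s, gain


# Hypothetical path lengths in metres.
direct = path_delay_and_gain(5.0, bounces=0)
reflection_2 = path_delay_and_gain(8.0, bounces=1)   # one wall
reflection_1 = path_delay_and_gain(12.0, bounces=2)  # two walls

for name, (delay, gain) in [("direct", direct),
                            ("reflection 2", reflection_2),
                            ("reflection 1", reflection_1)]:
    print(f"{name}: {delay * 1000:.1f} ms, relative level {gain:.3f}")
```

The direct signal arrives first and loudest, reflection 2 follows, and reflection 1 arrives last and quietest, matching the description above.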

Many game engines have reverb presets, because calculating reverb in real time can be heavy on the CPU. These presets can be used with good results, depending on the game. However, the sound designer may use the same reverb preset multiple times for slightly different environments, and this may start to become too familiar to the listener.

5.3 Obstruction

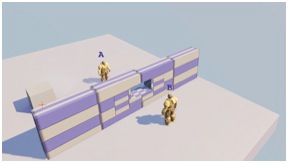

Fig.5

Fig.5 shows the sound source “A” and a listener “B” separated by a wall with open spaces at either side. When sound travels between points A and B it is obstructed. Sound passing through the gaps between the obstructions is unfiltered; however, sound behind the obstructions should be filtered and attenuated depending on the surface type, texture and size.

5.4 Occlusion

Fig.6

Fig.6 shows the listener “A” and the sound source “B” with a solid wall between the two points, so the direct sound will not be heard. Depending on the thickness of the wall, any sound that passes through it will need to be filtered and attenuated.
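“Filtered and attenuated” can be sketched as a low-pass filter followed by a gain reduction. The example below uses a simple one-pole low-pass filter; the cutoff frequency and gain are hypothetical parameters that a designer might derive from the wall's thickness or material, and this is not the filter used by any particular engine.

```python
import math


def occlude(samples, sample_rate_hz, cutoff_hz, gain):
    """Apply a one-pole low-pass filter, then scale the result by gain."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate_hz)
    state = 0.0
    out = []
    for x in samples:
        state += alpha * (x - state)  # smooths away high frequencies
        out.append(gain * state)
    return out


# A steady (low-frequency) signal passes through at the reduced level,
# while a rapidly alternating (high-frequency) signal is mostly removed.
steady = occlude([1.0] * 2000, 44_100, cutoff_hz=500.0, gain=0.5)
alternating = occlude([1.0, -1.0] * 1000, 44_100, cutoff_hz=500.0, gain=0.5)
print(steady[-1], max(abs(v) for v in alternating[-10:]))
```

A thicker wall would typically be modelled with a lower cutoff and a lower gain, giving the muffled, quieter sound the section describes.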

5.5 Exclusion

Fig.7

Fig.7 shows a sound source “A” and a listener “B” separated by a wall with a small open window. Ignoring the fact that the figure shows the listener standing in the same room, and imagining that the two were in separate rooms, the direct sound will be clear; however, the reflections of the sound will be muffled due to the geometry of the wall.

6. Subjective sound and mixing

To make a game sound aesthetically pleasing, just like a record that has been mixed and mastered, the final mix of a game must be mixed to a high standard. However, unlike the music industry, which adheres to the Red Book standard, there is as yet no final standard of quality that the games industry must abide by. In addition, there are many more variables that the audio designer must address when mixing for games, some of which are highlighted in the sections below.

6.1 Radius

In a 2010 article by Rob Bridgett [15] for gamasutra.com, Mellroth, K. (2010) discusses the importance of mixing and suggests that “radius is as, or more important than, volume modifiers”.

6.2 Dynamic range

The article also discusses dynamic range: the loudest and quietest sounds are identified, and all other sounds are then ranked between the two. An example is given from the game Fable II. Mellroth, K. (2010) [15] states that the Troll slam attack is the loudest sound heard; therefore even the gunshot should be quieter.

6.3 Software Tools

Each audio engine has its own structure for mixing. One example is Fmod, which has been used to mix games such as Heavenly Sword (2007) and Little Big Planet (2008).

With Fmod the audio designer can create a hierarchy of sounds with parent and child snapshots. Child snapshots can override parent snapshots, and each bus can have its volume and pitch values altered in real time.

Wwise offers a similar mixing hierarchy with the addition of passive mixing, which is achieved by effects such as peak limiting and auto-ducking. This is in addition to the active system, which works like the Fmod system described previously.
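As an illustration of the auto-ducking idea only, the sketch below lowers the gain of one bus (for example music) whenever the level on a trigger bus (for example dialogue) rises above a threshold, and restores it afterwards. It does not use the Wwise or Fmod APIs; the function name, threshold, ducked gain and step size are all assumptions chosen for the example.

```python
def duck_gains(trigger_levels, threshold=0.2, ducked_gain=0.4, step=0.1):
    """Return one gain per frame for the bus being ducked.

    While the trigger level is above the threshold the gain moves down
    towards ducked_gain; otherwise it recovers towards 1.0. The fixed
    step per frame avoids abrupt jumps in volume.
    """
    gain = 1.0
    gains = []
    for level in trigger_levels:
        target = ducked_gain if level > threshold else 1.0
        if gain > target:
            gain = max(target, gain - step)
        else:
            gain = min(target, gain + step)
        gains.append(gain)
    return gains


# Two quiet frames, eight frames of dialogue, then eight quiet frames.
levels = [0.0, 0.0] + [1.0] * 8 + [0.0] * 8
gains = duck_gains(levels)
print([round(g, 2) for g in gains])
```

The mix adjusts itself in response to what is playing, which is what distinguishes this passive approach from actively triggered snapshots.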

6.4 Emotional response vs. realism

The emotional response that the player receives from the game can be determined by the audio designer during the mix stage. This is an integral part of the overall sound of the game.

If the audio designer decides to duck a certain sound, increase the ambience or footsteps, or cut them out completely, each mix will achieve a different emotional response from the player. This is what the audio designer must get right in order to achieve the best outcome for the game.

So should the player hear someone else reloading?

Mellroth, K. (2010) [15] was asked a similar question in a gamasutra.com article and stated:

“We wrestle with these questions and sometimes make compromises”

It could be suggested that the needs of the game, i.e. the overall experience of the sound and the emotional feedback, could outweigh the realism of a natural soundscape.

7. Music

Writing and implementing music for games is a very complex procedure.

Depending on the developer or the desired outcome of the game, the composer may be responsible for composing the music to fit the action, or the audio designer may be responsible for the choice of implementation, so that the music transitions well with the action. Whether it is arranging to bars and beats or crossfading and layering, both the composer and the audio designer must understand each other’s work to convincingly pull off such a complex concept.

One commonly used technique for implementing music is vertical or horizontal layering. These layers call up each individual stem when needed, allowing for various mixes. Below is an example of a layered music system within the UDK engine.

Fig.7 shows a sound source “A” and a listener “B”separated by a wall with a small open window. Ignoring the fact that thelistener is stood in the same room. And imaging the two sources were inseparate rooms. The direct sound will be clear, however the reflections ofthese sounds will be muffled due to the geometry of the wall.

6.Subjective sound and mixing

To make a game sound aesthetically pleasing, just likea record that has been mixed and mastered the final mix of a game must be mixedto high standard. However unlike mixing for the music industry that applies tothe red book standard, as yet there is no final standard of quality that thegames industry must abide by. In addition to this there are many more variablesthat the audio designer must address when mixing for games some of which arehighlighted in the section below.

6.1 Radius

In a 2010 article by Rob Bridgett [15] forgamasutra.com. Mellroth, K. (2010) discuses the impotence of mixing and suggests“radius is as, or more important than, volume modifiers”

6.2 Dynamicrange

The article also discuses the dynamic range byfiguring out the loudest and quietest sounds then all other sounds are rankedbetween the two. An example of which is given in the game Fable II. Mellroth,K. (2010) [15] states that the Troll slam attack is the loudest sound heardtherefore even the gunshot should be quieter.

6.3 Software Tools

Each audioengine will have its own structure for mixing; an example of this is with Fmod,this software has been used to mix games such as Heavenly sword (2007) andLittle Big Planet (2008)

With Fmod the audio designer has the ability to createa hierarchy structure of sounds with parent and child snapshots the childsnapshots can override the parent snapshots with each bus having its volume andpitch values altered in real-time.

Wwise also offers a similar mixing hierarchy with theaddition of passive mixing, which is achieved by effects such as peak limitingand auto-ducking. In addition to the active system, which works like Fmodssystem, described previously.

6.4 Emotional response vs. realism

Theemotional response, that the player receives from the game, can be determinedby the audio designer during the mix stage. This is an integral part of theoverall sound of the game.

If the audiodesigner decides to duck a certain sound, increase the ambience or footsteps orcut them out completely, each mix will achieve a different emotional responsefrom the player. This is what the audio designer must get right in order toachieve the best outcome for the game.

So shouldthe player hear someone else reloading?

Mellroth, K.(2010) [15] was asked a similar question in a gamasutra.com article and stated.

“Wewrestle with these questions and sometimes make compromises”

It could be suggested that the needs of the game i.e.the overall experience of the sound, and emotional feedback could out way therealism of a natural soundscape.

7. Music

Writing and implementing music for games is a very complex procedure.

Depending on the developer or the desired outcome of the game, the composer may be responsible for composing the music to fit the action, or the audio designer may be responsible for the choice of implementation, so that the music will transition well with the action. Whether it is arranging to bars and beats or cross-fading and layering, both the composer and the audio designer must understand each other’s work to be able to convincingly pull off such a complex concept.

One commonly used technique for implementing music is vertical or horizontal layering. These layers call up each individual stem when needed, allowing for various mixes. Below is an example of a layered music system within the UDK engine.

Fig.8

Fig.8 shows a simple vertically layered music system. As the player triggers the first layer, all other layers will also play, keeping the music in time. This allows the player to switch between any instruments within the track, depending on the actions taken within the game. This technique can be used in many audio engines such as Fmod, Wwise and UDK.
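The idea of starting every stem together and only changing which stems are audible can be sketched as below. This is a simplified model and not UDK, Fmod or Wwise code: the class, its methods and the stem names are hypothetical, and real engines would fade the gains rather than switch them instantly.

```python
class LayeredMusic:
    """Hypothetical vertical layering model with one shared playback position."""

    def __init__(self, stems):
        # Every stem exists from the start, but is silent until enabled.
        self.gains = {name: 0.0 for name in stems}
        self.position = 0.0
        self.playing = False

    def start(self, first_layer):
        # Triggering the first layer starts all stems at the same position.
        self.playing = True
        self.position = 0.0
        self.gains[first_layer] = 1.0

    def advance(self, seconds):
        # All stems share one clock, so they cannot drift out of time.
        if self.playing:
            self.position += seconds

    def set_layer(self, name, audible):
        self.gains[name] = 1.0 if audible else 0.0

    def audible_layers(self):
        return sorted(name for name, gain in self.gains.items() if gain > 0.0)


music = LayeredMusic(["drums", "bass", "strings"])
music.start("drums")
music.advance(4.0)                  # four seconds of gameplay pass
music.set_layer("strings", True)    # e.g. the player enters combat
```

Because the strings stem has been running silently since the start, it enters in time with the drums rather than from its own beginning.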

8. Algorithms

The term “algorithms” on its own is meaningless to audio designers and is often thought of as a set of programmatic instructions to achieve an output value in as few steps as possible. However, Farnell (2007) [16] suggests that algorithmic sound is not interested in the final value but in the steps taken to achieve the final result (p. 4):

“Algorithmic sound tends to refer to the data, like that from a sequencer”

Farnell (2007)

It could be suggested that algorithmic audio could be used as another tool for game audio design. Farnell sums this up by introducing the term procedural audio.

“Procedural audio is non-linear, often synthetic sound, created in real time according to a set of programmatic rules and live input”

Farnell (2007)

8.1 So what is procedural audio?

Veneri, O. et al (n.d.) [17] give an insight into the types of sound that are achievable using procedural audio. These are broken down into two categories. The first is procedural sound, which consists of synthesizing sounds such as solid objects, water, air, fire, footsteps and bird sounds.

The second category described by Veneri, O. et al (n.d.) is procedural music. An example of this technique can be seen in the video game Spore (2008) [13]. This game uses a specially developed in-house tool that was replicated from the open source tool Pure Data (PD).

Procedural audio has its advantages and disadvantages. These must be weighed up before deciding to use this technique when designing audio for games.

8.2 Advantages

Firstly, we look at the advantages of using procedural audio. Procedural audio can offer a highly dynamic and flexible system that generates sounds in real time with effectively unlimited variety. Unlike a sampled piece of audio, which plays back the same sound over and over, procedural audio can have real-time parameters applied, decreasing the chance of repetition. It also increases the level of detail that can be applied to a sound, as more parameters can be adjusted.
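The reduction in repetition can be illustrated with a very small synthesis sketch. It is an assumption-laden example rather than a production technique: the decaying sine model, the parameter ranges and the function names are all hypothetical, and a convincing impact or footstep would need a far richer model.

```python
import math
import random


def synth_impact(freq_hz, decay_per_s, duration_s=0.2, sample_rate=8000):
    """Synthesize a decaying sine burst as a list of samples in [-1, 1]."""
    count = round(duration_s * sample_rate)
    return [
        math.exp(-decay_per_s * i / sample_rate)
        * math.sin(2.0 * math.pi * freq_hz * i / sample_rate)
        for i in range(count)
    ]


def varied_impact(rng, base_freq_hz=220.0):
    """Generate one impact with slightly randomised pitch and decay."""
    freq = base_freq_hz * rng.uniform(0.9, 1.1)
    decay = rng.uniform(15.0, 30.0)
    return synth_impact(freq, decay)


rng = random.Random(1)          # seeded only so the example is repeatable
first_hit = varied_impact(rng)
second_hit = varied_impact(rng)  # same rule, different parameters
```

A sampled impact would return identical data on every playback, whereas each call here yields a different waveform from the same rule.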

8.3 Disadvantages

The disadvantages of using procedural audio could outweigh the positives, as there is an increase in CPU cost. In addition, procedural audio can be time consuming to implement. Not all sounds are suitable, as more complex sounds are harder to synthesise and to make sound natural. Procedural audio also needs specialist audio engines to work, and not all developers will have the resources available.

9. Looking forward

It is extremely difficult to predict the future of game audio. It is suggested that model synthesis will become increasingly abundant within games, for example through the use of procedural audio and systems such as Wwise Wind and Wwise Woosh. This will allow games to have more variety without the need to record additional sounds, freeing up valuable space.

However, with the increase in RAM, hardware and disk availability, and more recently cloud gaming, some developers may choose to increase the allocation available to game audio. This would allow higher sample rates, which in turn deliver a cleaner, more dynamic sound to the listener.
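The link between sample rate and storage can be made concrete with the standard size calculation for uncompressed PCM audio. The clip length, bit depth and sample rates below are assumed values chosen for illustration, and compressed formats would reduce the figures.

```python
def pcm_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Size in bytes of uncompressed PCM audio."""
    return sample_rate_hz * (bit_depth // 8) * channels * seconds


# One minute of 16-bit stereo audio at two hypothetical sample rates.
low_rate_size = pcm_bytes(22050, 16, 2, 60)
high_rate_size = pcm_bytes(44100, 16, 2, 60)
```

Doubling the sample rate doubles the space required, which is why a larger audio allocation is needed before higher rates become practical.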

One recent technique, currently being applied to the Battlefield Bad Company titles, is the use of a High Dynamic Range (HDR) mixing system [18]. This system uses a window with a set minimum and maximum output value that constantly moves, filtering out any sounds below the minimum threshold.
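A moving window of this kind can be sketched as follows. This is one possible reading of the description above and not the system used in the Battlefield titles: it assumes the top of the window follows the loudest active sound, that the window has a fixed width, and that sound names and levels are hypothetical.

```python
def hdr_mix(sound_levels_db, window_db=40.0):
    """Return the audible sounds with levels relative to the window top.

    sound_levels_db maps a sound name to its assumed loudness in dB.
    The window top follows the loudest sound; anything more than
    window_db below the top is culled.
    """
    if not sound_levels_db:
        return {}
    top = max(sound_levels_db.values())
    floor = top - window_db
    return {
        name: level - top
        for name, level in sound_levels_db.items()
        if level >= floor
    }


# While an explosion plays, the footsteps fall below the window and are dropped.
during_explosion = hdr_mix({"explosion": 120.0, "gunshot": 100.0, "footsteps": 60.0})

# Once the explosion ends the window moves down and the footsteps return.
after_explosion = hdr_mix({"gunshot": 100.0, "footsteps": 60.0})
```

The same footsteps are either audible or removed depending on what else is playing, which is the behaviour a fixed volume hierarchy cannot provide.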

The above techniques will vary depending on factors such as the platform and genre, the developer and the desired outcome. We will, however, possibly see increased use of all the above techniques in future games.

References

[1] Collins, K. (2008) Game Sound: An Introduction to the History, Theory and Practice of Video Game Music and Sound Design. London, MIT Press pp. 3

[2] Huiberts, S. & Van Tol, R. (2008) IEZA: A framework for Game Audio <http://www.gamasutra.com/view/feature/3509/ieza_a_framework_for_game_audio.php> [Accessed 15th November 2011]

[3] Huiberts, S. (2010) Captivating sound: The role of audio for immersion in videogames.

[4] Collins, K. (n.d.) An Introduction to the Participatory and Non-Linear Aspects of Video Game Audio.

[5] Grimshaw, M. & Schott, G. (n.d.) Situating Gaming as a Sonic Experience: The acoustic ecology of First-Person Shooters pp.467

[6] Stevens, R. & Raybould, D. (2011) The Game Audio Tutorial: A Practical Guide to Sound and Music for Interactive Games. London, Focal Press pp.36.

[7] Meyer, B. (2011) AAA-Lite Audio: Design Challenges And Methodologies To Bring Console-Quality Audio To Browser And Mobile Games. <http://www.gamasutra.com/view/feature/6393/aaalite_audio_design_challenges_.php?page=2> [Accessed 15th November 2011]

[8] Parfit, D. (2005) Interactive Sound, Environment, and Music Design for a 3D immersive Video Game. 17 August, New York pp.4

[9] Splinter Cell Double Agent PC Gameplay Mission 7 Cozumel - Cruiseship Part 1 of 2 (2011) uploaded by engelbrutus <http://www.youtube.com/watch?v=wqGZ61-mtL8&feature=related> [Accessed 20th November 2011]

[10] Heavenly Sword (2007) Ninja Theory, Sony Computer Entertainment Inc.

[11] Little Big Planet (2008) Media Molecule, Sony Computer Entertainment Inc.

[13] Spore (2008) Maxis, Electronic Arts.

[14] Call of Duty Black Ops (2010) Treyarch, Activision.

[15] Bridgett, R. (2010) The Game Audio Mixing Revolution <http://www.gamasutra.com/view/feature/4055/the_game_audio_mixing_revolution.php?print=1> [Accessed 20th November 2011]

[16] Farnell, A. (2007) An Introduction to procedural audio and its application in computer games. 23 September.

[17] Veneri, O., Gros, S. & Natkin, S. (n.d.) Procedural Audio using GAF

[18] Miguel, I. (2010) Battlefield Bad Company 2 – Exclusive Interview with Audio Director Stefan Strandberg <http://designingsound.org/2010/03/battlefield-bad-company-2-exclusive-interview-with-audio-director-stefan-strandberg/> [Accessed 21st November 2011]
